How to Install Ollama for Machine Learning Models on Your VPS


Machine learning (ML) has become an essential component of modern computing, enabling tasks such as natural language processing, image recognition, and data analysis. However, running ML models on a local machine can be resource-intensive, which makes a Virtual Private Server (VPS) an attractive alternative. In this post, we’ll show you how to install Ollama, a lightweight tool for running large language models (LLMs) efficiently on your VPS.

What is Ollama?

Ollama is an open-source tool for downloading, running, and managing large language models on your own machine. It provides a simple command-line interface as well as a local REST API (listening on port 11434 by default). Its straightforward setup and flexibility make it a popular choice for developers who want to experiment with models without complex configuration.

Installation

This guide uses an Ubuntu 22.04 server.

Step 1 – Update and Upgrade Your VPS

Update all system packages so that your Ubuntu server is up to date:

sudo apt update && sudo apt upgrade -y

Step 2 – Install Python and Pip

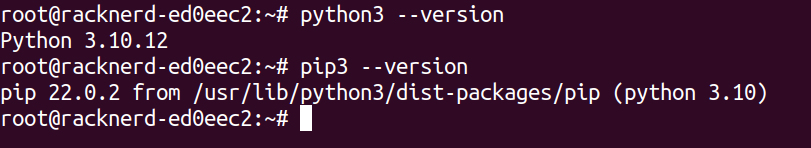

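Ollama itself ships as a standalone binary and does not depend on Python. Python and its package manager, pip, are still useful if you want to script against Ollama, for example with the official ollama Python client library. Install them, along with Git, and verify the installed versions: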

sudo apt install python3 python3-pip git -y

python3 --version

pip3 --version
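Before moving on, a quick pre-flight check can confirm that the commands from this step are on your PATH. The sketch below is our own illustration; check_tools is a hypothetical helper name, not part of Ollama or Ubuntu.

```bash
# Hypothetical helper: report whether each named command is on PATH.
# Prints "ok: <cmd>" or "missing: <cmd>" for every argument and returns
# 0 only if all of the commands were found.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "ok: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

Paste or source the function into your shell and run check_tools python3 pip3 git; a non-zero exit status means at least one tool still needs to be installed.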

Step 3 – Install Required Dependencies

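Ollama itself does not need these packages, but build tools and development headers are commonly required when pip compiles Python packages that include native extensions. Install them if you plan to build such packages: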

sudo apt install build-essential libssl-dev libffi-dev python3-dev -y

Step 4 – Download and Install Ollama

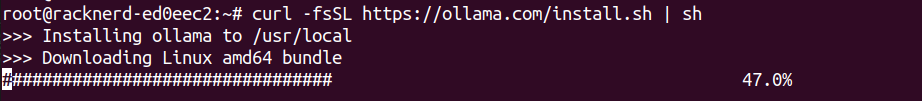

Download and run the official Ollama install script with the following command:

curl -fsSL https://ollama.com/install.sh | sh

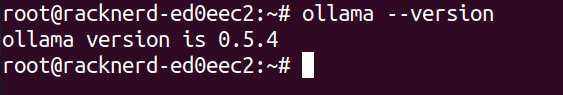
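Piping a remote script straight into sh runs it without giving you a chance to read it first. If you prefer to review the installer, you can download it to a file, inspect it, and then run it yourself. This is a minimal sketch; fetch_installer and the default file name ollama-install.sh are our own choices.

```bash
# Hypothetical helper: save an install script to a local file for review
# instead of piping it directly into sh.
#   $1 - script URL (defaults to the official Ollama install script)
#   $2 - output file (defaults to ./ollama-install.sh)
fetch_installer() {
  url="${1:-https://ollama.com/install.sh}"
  out="${2:-ollama-install.sh}"
  # -f: fail on HTTP errors, -sS: quiet but still show errors, -L: follow redirects
  curl -fsSL "$url" -o "$out" || return 1
  echo "saved to $out"
}
```

After running fetch_installer, read the file with less ollama-install.sh and, once you are satisfied, execute it with sh ollama-install.sh.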

Verify the installation by checking the installed version:

ollama --version

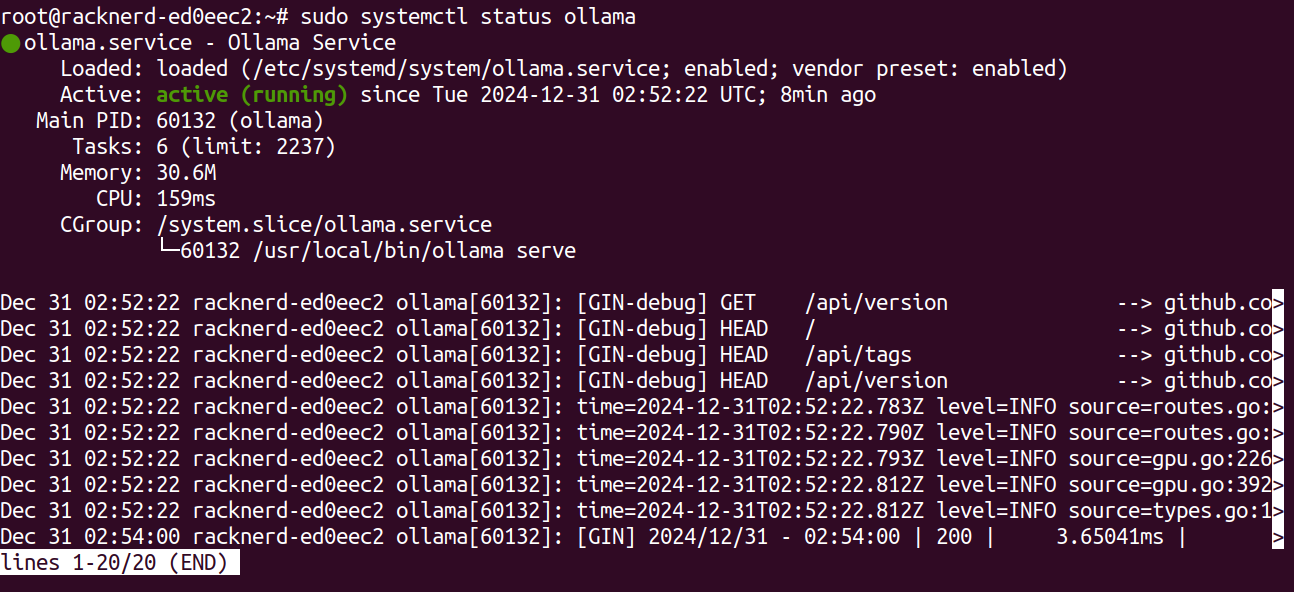

On Linux, the install script also registers Ollama as a systemd service. To confirm that the service is running, check its status:

sudo systemctl status ollama

If the output reports the service as "active (running)", Ollama is installed and running properly.
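Besides checking the systemd service, you can confirm that the Ollama server actually answers HTTP requests. Its REST API listens on localhost:11434 by default, and the /api/version endpoint returns the server version as JSON. The sketch below wraps that check in a function; ollama_healthy and the OLLAMA_URL variable are our own names for illustration (OLLAMA_URL is not an Ollama setting).

```bash
# Hypothetical helper: succeed if an Ollama server answers HTTP requests.
# OLLAMA_URL is our own variable name, not an Ollama setting; by default we
# target the local server on Ollama's default port, 11434.
ollama_healthy() {
  base="${OLLAMA_URL:-http://localhost:11434}"
  # GET /api/version returns a small JSON object such as {"version":"..."}.
  if curl -fsS --max-time 5 "$base/api/version"; then
    echo
    return 0
  fi
  echo "no Ollama server reachable at $base" >&2
  return 1
}
```

The --max-time limit keeps the check from hanging if the port is filtered, which makes the function suitable for cron jobs or monitoring scripts.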

Now, let’s look at some common Ollama commands you can run in the terminal:

List models downloaded to your VPS:

ollama list
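In scripts it is often useful to know whether a model has already been downloaded. The sketch below assumes the usual layout of ollama list output: a header row, then one row per model with the name (including its tag, such as :latest) in the first column. has_model is a hypothetical helper.

```bash
# Hypothetical helper: succeed if the given model appears in `ollama list`.
# Assumes the first output line is a header and the first column is the
# NAME in "model:tag" form; a name without a tag is treated as ":latest".
has_model() {
  name="$1"
  case "$name" in
    *:*) ;;                    # tag already given
    *) name="$name:latest" ;;  # Ollama's default tag
  esac
  ollama list | awk 'NR > 1 { print $1 }' | grep -qxF "$name"
}
```

For example, has_model llama3.2 || ollama pull llama3.2 downloads the model only when it is missing. Because the function parses human-readable output, it may need adjusting if a future Ollama release changes the table format.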

Run a model (Ollama downloads it first if it is not already available locally):

ollama run <model_name>
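ollama run is convenient interactively, but applications usually talk to the Ollama server over its REST API instead. The sketch below sends a single prompt to the /api/generate endpoint with streaming turned off and prints only the generated text. It assumes the server is on the default http://localhost:11434 and uses python3 (installed in Step 2) to build and parse the JSON; ollama_generate and OLLAMA_URL are our own hypothetical names.

```bash
# Hypothetical helper: send one prompt to Ollama's /api/generate endpoint and
# print only the generated text. python3 builds and parses the JSON so that
# quotes and newlines in the prompt are escaped correctly.
ollama_generate() {
  model="$1"
  prompt="$2"
  base="${OLLAMA_URL:-http://localhost:11434}"
  payload=$(python3 -c 'import json, sys; print(json.dumps({"model": sys.argv[1], "prompt": sys.argv[2], "stream": False}))' "$model" "$prompt") || return 1
  # With "stream": false the server replies with a single JSON object whose
  # "response" field holds the full generated text.
  curl -fsS "$base/api/generate" -H 'Content-Type: application/json' -d "$payload" |
    python3 -c 'import json, sys; print(json.load(sys.stdin)["response"])'
}
```

For example, ollama_generate llama3.2 "Summarize what a VPS is in one sentence." prints the model's answer, assuming that model has been pulled. Turning streaming off trades incremental output for a single response that is simpler to parse.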

Show model information:

ollama show <model_name>

Stop a running model:

ollama stop <model_name>

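Push a model to a registry (pushing to ollama.com requires an account, and the model name must be prefixed with your username):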

ollama push <model_name>

Pull a model from a registry:

ollama pull <model_name>
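When provisioning a new VPS, you may want to pull several models in one go. This sketch reads model names from a text file, one per line (blank lines and lines starting with # are skipped), pulls each one, and reports any that failed. The file format and the pull_models name are our own assumptions.

```bash
# Hypothetical helper: pull every model named in a file, one name per line.
# Blank lines and lines starting with "#" are ignored. All pulls are
# attempted; the function returns 1 if any of them failed.
pull_models() {
  failed=0
  while IFS= read -r model || [ -n "$model" ]; do
    case "$model" in
      ''|'#'*) continue ;;
    esac
    # Defensive: give the command its own stdin so it cannot read the model list.
    if ollama pull "$model" < /dev/null; then
      echo "pulled: $model"
    else
      echo "failed: $model" >&2
      failed=1
    fi
  done < "$1"
  return "$failed"
}
```

For example, pull_models models.txt with a models.txt containing llama3.2 and mistral on separate lines pulls both models and exits non-zero if either download fails.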

List models currently loaded in memory:

ollama ps
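ollama ps and ollama stop combine naturally: to free memory on a small VPS, you can unload every model that is currently loaded. The sketch below assumes ollama ps prints a header row followed by one row per model with the name in the first column; stop_all_models is a hypothetical helper.

```bash
# Hypothetical helper: unload every model listed by `ollama ps`.
# Skips the header row and takes the model name from the first column.
stop_all_models() {
  ollama ps | awk 'NR > 1 && $1 != "" { print $1 }' | while IFS= read -r model; do
    # Give `ollama stop` its own stdin so it cannot read the remaining names.
    ollama stop "$model" < /dev/null && echo "stopped: $model"
  done
}
```

Running stop_all_models before a memory-heavy task unloads idle models; the next ollama run or API request simply loads the model again.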

Conclusion

Installing Ollama on your VPS lets you run large language models (LLMs) even in a resource-constrained environment. After a simple installation process, you can quickly interact with a range of models and put them to work in a wide variety of applications. Even if your VPS has no GPU and Ollama runs in CPU-only mode, it still provides powerful functionality, making it a versatile solution for developers and data scientists.

By following the installation and configuration steps above, you can quickly start using machine learning models on your VPS. You can always expand your setup with more models and configurations as your needs grow. Whether you’re experimenting with models or developing production-level applications, Ollama offers an accessible and powerful toolkit for modern AI workloads.

Ollama lets you explore machine learning in a low-cost, easy-to-set-up environment on your own VPS.